AI-enabled solutions that provide advanced insights based on radiology images

We build solutions that generate actionable insights from radiology images to help providers make more informed diagnostic and treatment decisions.

-

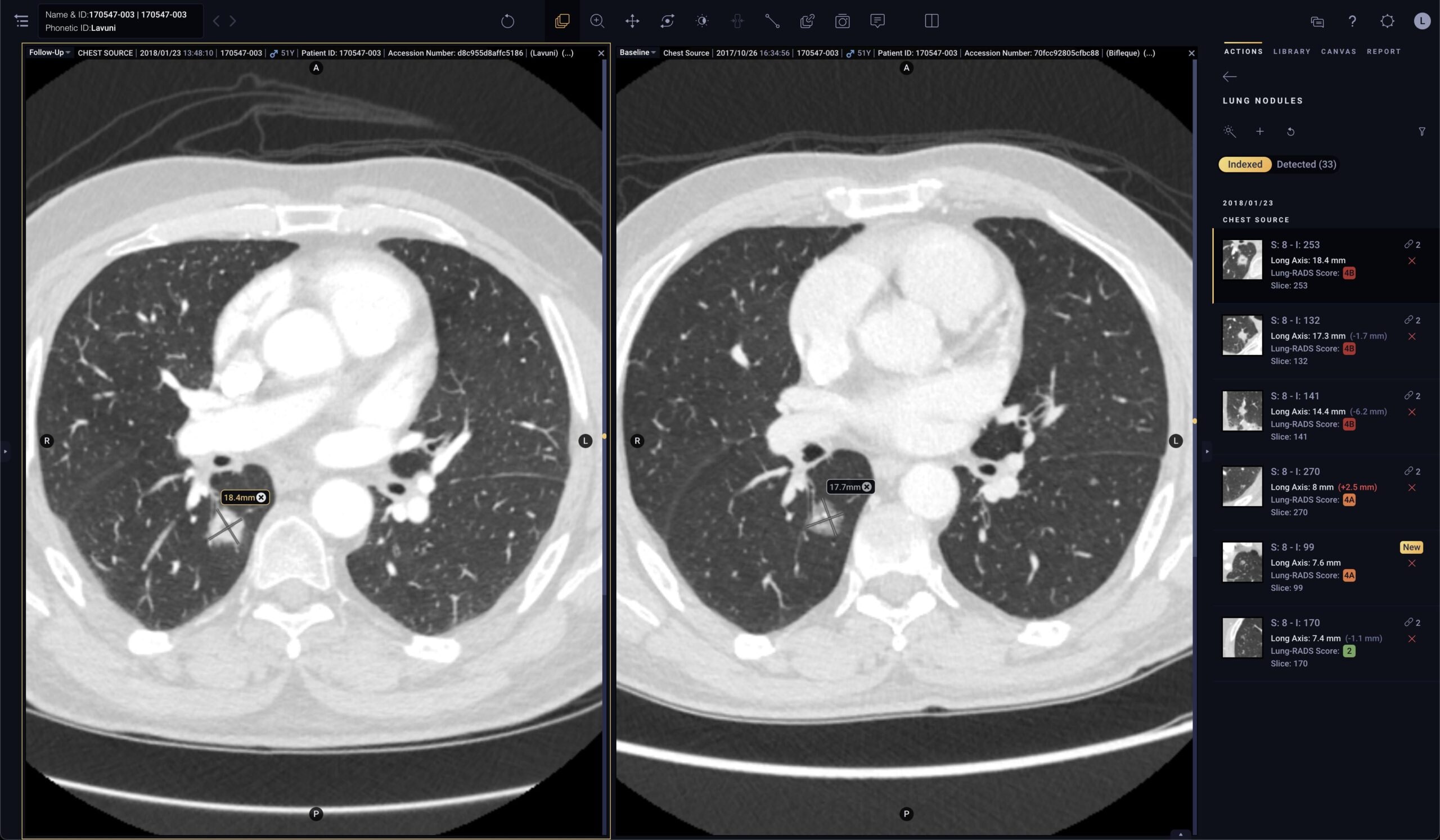

Pixel for Oncology

A solution designed specifically for oncologists to monitor disease progression efficiently

- Automated quantification: Segments and measures lesions of interest, providing accurate contours along with long-axis and short-axis measurements¹

- Automated tracking: Links lesions across time points to build longitudinal tracking reports and calculate lesion response automatically

- Automated reporting: Applies therapy response criteria such as RECIST automatically and generates a comprehensive report that can be linked to the patient record in the EMR or in Tempus Hub
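Automated response assessment of this kind ultimately comes down to arithmetic on tracked lesion measurements. The Python sketch below illustrates what applying RECIST 1.1 to target lesions can look like. Nodal lesions contribute their short axis and all other lesions their long axis to a sum of diameters. A decrease of at least 30% from the baseline sum is a partial response. An increase of at least 20% and at least 5 mm over the smallest sum on study (the nadir) is progression. This is not Tempus Pixel code: `TargetLesion` and `classify_target_response` are hypothetical names. The sketch assumes measurements in millimetres, ignores non-target lesions and confirmation scans, and treats new lesions as a simple flag.

```python
# Hypothetical sketch of RECIST 1.1 target-lesion response classification.
# Assumptions: measurements are in millimetres; the same target lesions are
# tracked at every time point (a resolved lesion is recorded as 0 mm);
# non-target lesions and confirmation scans are ignored; new lesions are a flag.
from dataclasses import dataclass
from enum import Enum
from typing import Sequence


class Response(Enum):
    CR = "complete response"
    PR = "partial response"
    SD = "stable disease"
    PD = "progressive disease"


@dataclass(frozen=True)
class TargetLesion:
    long_axis_mm: float
    short_axis_mm: float
    is_lymph_node: bool = False

    def recist_diameter(self) -> float:
        # Nodal lesions contribute their short axis; all others their long axis.
        return self.short_axis_mm if self.is_lymph_node else self.long_axis_mm


def sum_of_diameters(lesions: Sequence[TargetLesion]) -> float:
    return sum(lesion.recist_diameter() for lesion in lesions)


def classify_target_response(
    prior_sums: Sequence[float],
    current: Sequence[TargetLesion],
    new_lesions: bool = False,
) -> Response:
    """prior_sums[0] is the baseline sum; later entries are earlier follow-ups."""
    if not prior_sums or prior_sums[0] <= 0:
        raise ValueError("a positive baseline sum of diameters is required")
    if not current:
        raise ValueError("the tracked target lesions must be provided")
    baseline, nadir = prior_sums[0], min(prior_sums)
    current_sum = sum_of_diameters(current)

    # PD: any new lesion, or growth of >= 20% AND >= 5 mm over the nadir.
    growth = current_sum - nadir
    if new_lesions or (growth >= 5.0 and growth >= 0.20 * nadir):
        return Response.PD
    # CR: non-nodal targets have disappeared; nodal targets are < 10 mm short axis.
    if all(
        lesion.short_axis_mm < 10.0 if lesion.is_lymph_node else lesion.long_axis_mm == 0.0
        for lesion in current
    ):
        return Response.CR
    # PR: a decrease of >= 30% relative to the baseline sum.
    if current_sum <= 0.70 * baseline:
        return Response.PR
    return Response.SD


# Usage: a liver lesion plus a pathological lymph node (baseline sum 40 + 20 = 60 mm).
baseline = [TargetLesion(40.0, 28.0), TargetLesion(24.0, 20.0, is_lymph_node=True)]
follow_up = [TargetLesion(25.0, 18.0), TargetLesion(15.0, 12.0, is_lymph_node=True)]
print(classify_target_response([sum_of_diameters(baseline)], follow_up).name)  # PR (37 mm)
later = [TargetLesion(32.0, 22.0), TargetLesion(16.0, 13.0, is_lymph_node=True)]
print(classify_target_response([60.0, 37.0], later).name)  # PD (45 mm vs. 37 mm nadir)
```

The sketch checks progression before complete or partial response on purpose. A sum can sit well below baseline yet still have grown at least 20% and 5 mm from its nadir, and RECIST 1.1 classifies that case as progression.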


Pixel for Radiology

A solution designed specifically to optimize how radiologists read images, detect findings, generate reports, and share insights with referring physicians

- Automated image analysis: One-click segmentation of lesions on CT and MR images

- Automated reporting: Generates reports from AI analysis that can be configured and customized for a more efficient workflow, giving providers advanced insights and editable reports based on radiology images to help reduce turnaround time and improve reporting consistency

- Seamless integration: Easily integrates AI-enabled tools with existing PACS, EHR, worklist, notification, and dictation systems to optimize workflows

- 100+ health systems

- 1.3B+ radiology images

- 50+ algorithms

- One-click lesion segmentation is automated using DeepLook Precise (K202084) and is FDA-cleared but is not available for clinical use in the EU. DeepLook is the manufacturer of DeepLook Precise.

- In the U.S., Tempus Pixel Lung is FDA-cleared (K173542) but is not indicated for lung nodule detection. Arterys Inc is the manufacturer of Tempus Pixel Lung, excluding any third party components described in this list. Automated lung nodule detection and quantification is powered by InferRead CT (K192880); Infervision is the manufacturer of InferRead CT.

- In the EU, Tempus Pixel Lung is powered by Tempus Pixel and is CE-marked for detection and quantification of nodules. Arterys Inc is the manufacturer of Tempus Pixel Lung, excluding any third party components described in this list.

- Kozuka T, Matsukubo Y, Kadoba T, et al. Efficiency of a computer-aided diagnosis (CAD) system with deep learning in detection of pulmonary nodules on 1-mm-thick images of computed tomography. Jpn J Radiol. 2020;38(11):1052-1061. https://doi.org/10.1007/s11604-020-01009-0

- Conant EF, Toledano AY, Periaswamy S, et al. Improving accuracy and efficiency with concurrent use of artificial intelligence for digital breast tomosynthesis. Radiology: Artificial Intelligence. 2019;1(4):e180096. https://doi.org/10.1148/ryai.2019180096

- Tempus Pixel Breast is FDA-cleared (K192437) and CE-marked; detection of lesions suspicious for breast cancer is powered by ProFound AI (K203822). Arterys Inc is the manufacturer of Tempus Pixel Breast, excluding any third party components described in this list. iCAD is the manufacturer of ProFound AI.

- Tempus Pixel Cardio is FDA-cleared (K203744). Arterys Inc is the manufacturer of Tempus Pixel Cardio, excluding any third party components described in this list.

- Atkins MB. Integration of 4D-Flow into routine clinical practice of congenital and non-congenital cardiac MRI-18 months experience demonstrating decreased scan times, physician monitoring, and patient breath hold times. [366897]. CMR 2018; pp. 789-790.

- Narang A, Miller T, Ameyaw K, et al. A machine learning algorithm for determination of left ventricle volumes and function: comparison to manual quantification. J Am Coll Cardiol. 2019;73(9):1638. https://doi.org/10.1016/S0735-1097(19)32244-2